As mission, environment, or threat complexity increases, uncrewed maritime systems (UMS) need to be able to convert large amounts of data into actionable information. This information can be provided to on-board autonomy to adapt plans, or act as a decision aid to users. Improved understanding of the domain allows crewed and uncrewed systems to effectively collaborate, creating dynamic teams that can share relevant information and explain their actions.

Uncrewed systems can be equipped with a large variety of sensors, including visual, acoustic, laser and magnetic, and these produce data in a variety of formats. Modern data management frameworks allow this data to be stored intelligently using common data standards. Modular open architecture concepts allow state-of-the-art perception modules to retrieve and process this data into actionable information.
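As a rough illustration of the idea above, the sketch below stores samples from different sensors in one shared record type and passes them through interchangeable perception modules. Every name here (`SensorRecord`, `Detection`, `PerceptionModule`, `ThresholdDetector`, `run_modules`) is hypothetical and invented for this example; it does not represent any SeeByte product, data standard or API.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class SensorRecord:
    """One sensor sample in a shared, sensor-agnostic format (hypothetical schema)."""
    sensor_type: str      # e.g. "sidescan", "optical", "magnetic"
    timestamp_s: float    # seconds since mission start
    payload: list[float]  # simplified stand-in for the raw samples


@dataclass(frozen=True)
class Detection:
    """Actionable output produced by a perception module."""
    sensor_type: str
    timestamp_s: float
    score: float


class PerceptionModule(Protocol):
    """Interface that any pluggable module is assumed to implement."""
    def process(self, record: SensorRecord) -> list[Detection]: ...


class ThresholdDetector:
    """Toy module: flags a record when its peak sample reaches a threshold."""

    def __init__(self, sensor_type: str, threshold: float) -> None:
        self.sensor_type = sensor_type
        self.threshold = threshold

    def process(self, record: SensorRecord) -> list[Detection]:
        # Ignore records from other sensors and empty payloads.
        if record.sensor_type != self.sensor_type or not record.payload:
            return []
        peak = max(record.payload)
        if peak < self.threshold:
            return []
        return [Detection(record.sensor_type, record.timestamp_s, peak)]


def run_modules(records, modules):
    """Pass every stored record through every registered module."""
    return [d for r in records for m in modules for d in m.process(r)]


records = [
    SensorRecord("sidescan", 1.0, [0.1, 0.9, 0.2]),
    SensorRecord("optical", 2.0, [0.3, 0.4]),
]
detections = run_modules(records, [ThresholdDetector("sidescan", 0.5)])
```

Because each module depends only on the shared record type, a new sensor or algorithm can be added in this sketch without changing the code that stores or routes the data.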

Our approach

When early UMS operations first highlighted the challenge of analysing large volumes of mission data, SeeByte began developing automatic target recognition (ATR) algorithms for side-scan sonar data. This evolved into generic target detection, classification and tracking algorithms across multiple sensor types, including real-time pipe tracking, multi-beam sonar tracking and optical video processing. These systems help analyse large volumes of data across a broad range of applications, and are also used to provide scene awareness for autonomous systems carrying out precision inspection tasks.

Deep Learning

Most recently, deep learning has revolutionised intelligent perception where large data volumes are available. We have applied the latest generation of deep learning algorithms to automated detection and classification tasks, and are applying state-of-the-art deep learning approaches to realistic synthetic sensor data generation. We now offer a broad range of tools, including automatic target recognition, environmental characterisation, performance estimation and training tools, as well as synthetic environments for uncrewed vehicles (UxV), including sensor data generation models.

Trusted Modules

- Our perception products build on a common data management framework, allowing perception models to be rapidly applied to new applications and sensors.

- Data-driven approaches allow users to adapt and improve the system as new data becomes available.

- Flexible configuration management approaches allow users to configure systems for specific problem sets.

- Our pioneering work in synthetic data generation allows accurate statistical estimation of the performance of both the perception algorithm and the user analysing the data.

- Innovative training technology, based on advanced vehicle and sensor simulation, allows users to train on the use of uncrewed systems under realistic scenarios without leaving the office.
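To make the performance-estimation point in the list above concrete, the sketch below estimates a detection probability, with a confidence interval, from the outcome of a set of synthetic trials. It uses the standard Wilson score interval as an example technique; this is an assumption made for illustration, not a description of SeeByte's method, and the trial counts are hypothetical.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 is roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    centre = (p_hat + z**2 / (2 * trials)) / denom
    half_width = (
        z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)) / denom
    )
    return centre - half_width, centre + half_width


# Hypothetical outcome: 180 of 200 synthetic targets were detected.
low, high = wilson_interval(180, 200)
print(f"Estimated detection probability: 0.90 (interval {low:.3f} to {high:.3f})")
```

The same calculation applies whether the detections come from an algorithm or from a human analyst reviewing the synthetic data, which is why a single routine can serve both cases in this sketch.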

Scaling to different platforms

Fully supported Software Development Kits (SDKs) reduce transition times by simplifying the integration of third-party hardware or software modules. This enables vendors to rapidly integrate their platforms, and users to take advantage of each platform's unique capabilities.

Case Studies

Overview

In exclusive collaboration with SeeByte, i-Tech 7, a Subsea 7 company and a leading Life of Field partner that supplies integrated products and services throughout the oil & gas industry, has developed the Autonomous Inspection Vehicle (AIV), the most advanced, fully autonomous, hovering vehicle in the subsea market.

Technology

Our software for the AIV dynamically controls this unique hover-capable vehicle, and covers sensor processing, embedded autonomy and high-level mission planning.

Result

A fielded software stack that spans everything from low-level thruster control to complex autonomy behaviours, enabling a small hover-capable AUV to operate at depths of up to 3000 m for 24 hours in the complex infield environment without human intervention.

Autonomous Inspection Vehicle (AIV)

Overview

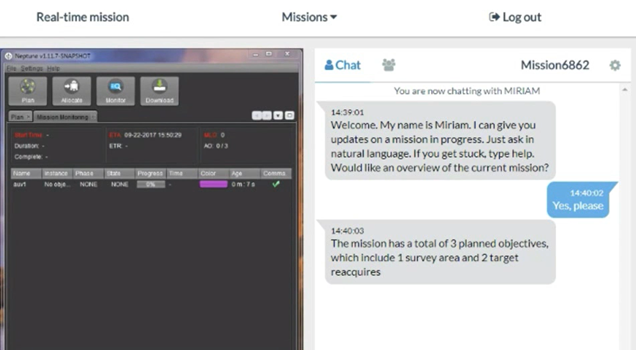

Dstl’s MIRIAM project developed a system that sits between the human operator and the autonomous systems, fielding questions and reporting back in natural language. This can be through either a chat-style interface or a text-to-speech interface.

Demo

MIRIAM was jointly developed by SeeByte and Heriot-Watt University, and was demonstrated during an ORCA Hub event with various uncrewed systems integrated.

Result

A conversational assistant, similar to Siri or Alexa, that supports human-machine teaming in complex environments and builds operator trust when multiple autonomous systems are operating as a team.

MIRIAM Interface